So You Want a vector of Uninitialized Elements?

In ongoing work to parallelize evolution-of-multicellularity research software, we want to interface the cleverly-named serialization framework cereal with the matter-of-factly named Message Passing Interface. The basic idea is to take C++ objects, use cereal to turn them into bitstreams (plain Jane 0’s and 1’s), and then use MPI to send the bitstreams between compute nodes. However, the cereal interface is built around stream objects and the MPI interface is built around raw, contiguous memory (yay, void pointers ‘n sizeofs).

The class std::stringstream provides a workable, but potentially inefficient, solution.

First we would truck the data into a std::string using the .str() method.

Then, we would call .c_str() on the string to get a pointer to the beginning of a contiguous allocation containing our data!

The fundamental problem here is that std::stringstream doesn’t necessarily store data contiguously.

Implementations might, in fact, store data in disjoint chunks, and the interface reflects that fact.

So, to reduce unnecessary copying (for time and space efficiency), we want a stream (which cereal can talk to) that writes to contiguous memory we can then hand off to MPI.

@nateriz and I got to work and wrote a ContiguousStream class for the Empirical C++ library that does just that.

🔗 The Problem

Under the hood, our ContiguousStream implementation puts streamed-in data into a std::vector<char>.

That way, we can increase capacity in a happy modern C++-y way.

But, we do a little unnecessary work here: when we create the vector and each time we call .resize() on it C++ helpfully zero-initializes the fresh space.

(Translation: it runs through that memory and sets it all to zero).

This zero-initialization work’s not actually helpful for us because we only ever care about what’s in memory after we’ve streamed data into it.

(We don’t care about memory we’ve allocated but haven’t streamed to yet.)

If a user were to use fresh ContiguousStream for each output task (instead of .Reset()-ing an existing ContiguousStream), the amount of wasted zero-initialization work done would be roughly proportional to the amount of data written.

Yuck!

🔗 An Idea

We want a vector that doesn’t waste time zero-initializing members.

What to do, what to do?

We could write an entire vector implementation that doesn’t default-initialize elements when constructed with a size parameter or extended with .resize().

Andre Offringa actually did do this and wrote it up as a great blog post with extensive profiling results.

It’s an effective solution, but it’s also 1.2k lines of neither easy nor simple (and in this case, also copyleft-licensed ![]() ).

).

What if we just wrapped our data members in a struct that didn’t zero-initialize them?

For char (our target in this case) this looks along the lines of,

struct uninitialized_char {

char val;

// necessary to prevent zero-initialization of val

uninitialized_char() { ; }

// make implicitly convertible to char

operator char() const { return val; }

};

Then, we could just make a vector of those instead (e.g., std::vector<uninitialized_char>).

🔗 Will It Blend Compile?

Yes, it does.

Peeking at the intermediate representation std::vector<uninitialized_char> compiles down to, we can actually see the machine code for zero-initialization go away!

The Compiler Explorer snippet above compares three functions:

-

uninit: construct a 1024-bytestd::vector<uninitialized_char>, -

poser_uninit: construct a 1024-bytestd::vector<poser_uninitialized_char>, and -

init: construct a 1024-bytestd::vector<char>.

(There’s also some fancy footwork to prevent the vectors we’re interested from being completely optimized away.)

We can see that Clang generates shorter intermediate assembly for uninit than init.

Inspecting in greater detail, we can see a call to memset, a prime suspect for actually carrying-out zero-initialization, present in init but not in uninit.

It seems that uninitialized_char is working how we expect!

I also included the struct poser_uninitialized_char (hooked into poser_uninit) to illustrate the role of uninitialized_char’s custom constructor.

It appears that in poser_uninitialized_char (which uses a default constructor) the val member is still being zero-initializedd.

🔗 Is It on Fleek Performant?

Yes, it is.

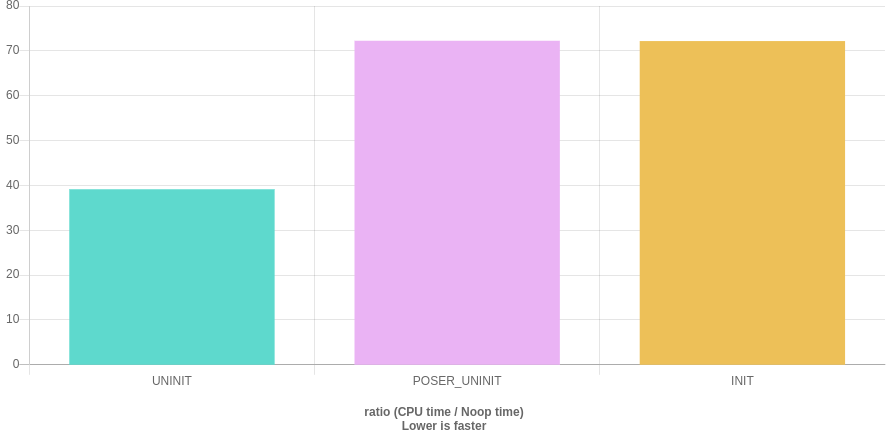

I benchmarked the impact of uninitialized_char char on 1024-byte vector creation using the nifty Quick C++ Benchmark web tool.

You can see a ~1.8x speedup when using std::vector<uninitialized_char> compared to vectors of raw char’s or the wrapper class lacking the custom constructor that eschews member zero-initialization.

The benchmarking code and results are live here.

🔗 Let’s Chat!

Questions? Comments? Concerns? Other ideas?

I started a twitter thread (right below) so we can chat ![]()

![]()

![]()

wrote a cute lil' blog post 🐶 on a cute lil' #cpp hack 😽 to make vectors go fast by skipping zero-initialization of contents

— mmore500 (@mmore500) December 12, 2019

I address key q's such as

1. will it b̶l̶e̶n̶d̶ compile

2. is it o̶n̶ ̶f̶l̶e̶e̶k̶ performant

uwu 🔜 https://t.co/B9mRtQDdJd